I am currently a Postdoctoral Researcher at the Singapore University of Technology and Design (SUTD), where I collaborate with Prof. Immanuel Koh. I received my Ph.D. from Beijing University of Chemical Technology, under the supervision of Prof. Fan Zhang and Prof. Wei Hu.

Starting in March 2023, I was a visiting student at the Vision and Learning Group (VLG) at SUTD, where I had a wonderful and productive experience working with Prof. Jun Liu. Following that, I conducted research at Microsoft Research Asia, collaborating with Yangyu Huang.

My research interests lie primarily in Large Multimodal Models, AI Agents, and Visual Perception and Reasoning in the Open World.

🔥 News

- 2025.05: 🎉 MMLU-CF accepted to the ACL 2025 main conference.

- 2025.02: 🎉 One paper accepted to CVPR 2025.

- 2025.01: 🎉 GaussianBlock accepted to ICLR 2025, see you in Singapore.

- 2024.07: 🎉 LTRL accepted to ECCV 2024 as an Oral (Oral Paper Rate: 2.1% (188/8585)).

- 2024.04: 🏅 LTGC was selected for oral presentation at CVPR 2024 (Oral Paper Rate: 0.78% (90/11532), Acceptance Rate: 23.6% (2719/11532)).

📖 Experiences

- 2024.06 - 2025.01, Microsoft Research Asia (MSRA)

- Topic: Contamination-free LLM Benchmark

- 2023.03 - 2024.03, Singapore University of Technology and Design, Visiting Student.

- Topic: Long-Tail Recognition with LLMs

- 2019.09 - 2024.06, Beijing University of Chemical Technology, Joint Master’s and Ph.D.

- Topic: Visual Recognition in the Open World

📝 Publications (* Equal Contribution)

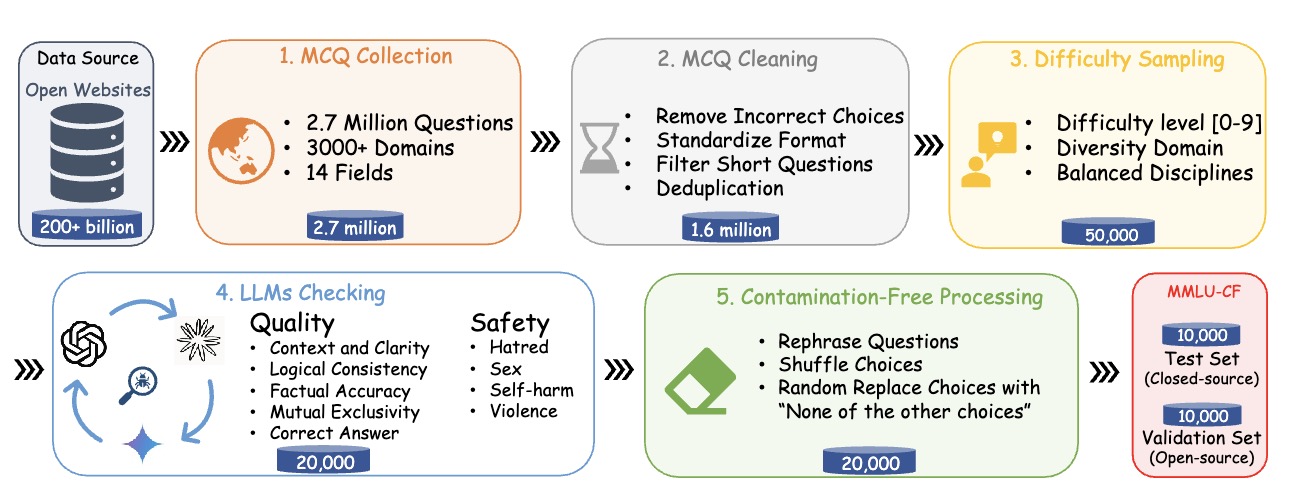

MMLU-CF: A Contamination-free Multi-task Language Understanding Benchmark

MMLU-CF is a contamination-free and more challenging multiple-choice question benchmark.

Qihao Zhao, Yangyu Huang, Tengchao Lv, Lei Cui, Qinzheng Sun, Shaoguang Mao, Xin Zhang, Ying Xin, Qiufeng Yin, Scarlett Li, Furu Wei

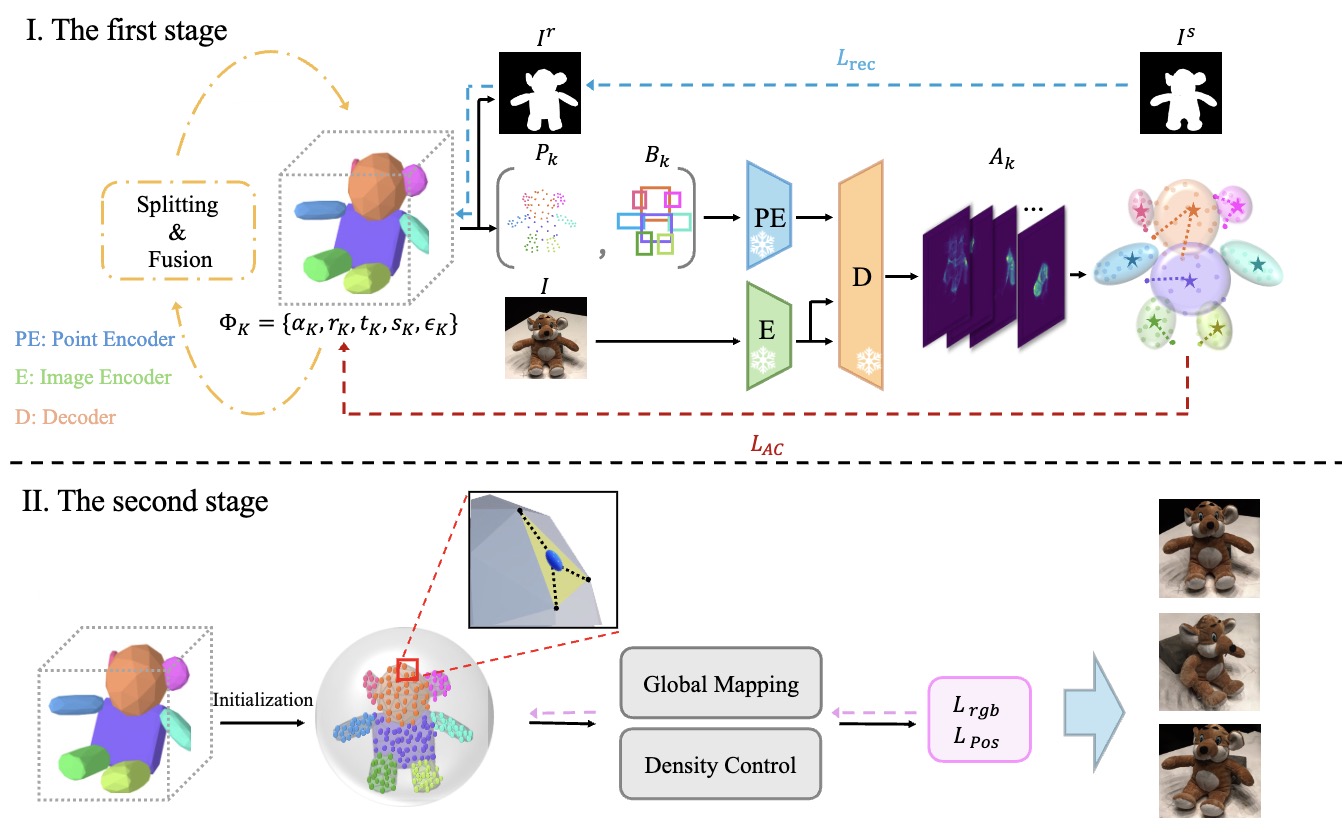

GaussianBlock: Building Part-Aware Compositional and Editable 3D Scene by Primitives and Gaussians

GaussianBlock introduces a hybrid representation that leverages the advantages of both primitives, known for their flexible actionability and editability, and 3D Gaussians, which excel in reconstruction quality.

Shuyi Jiang, Qihao Zhao, Hossein Rahmani, De Wen Soh, Jun Liu, Na Zhao

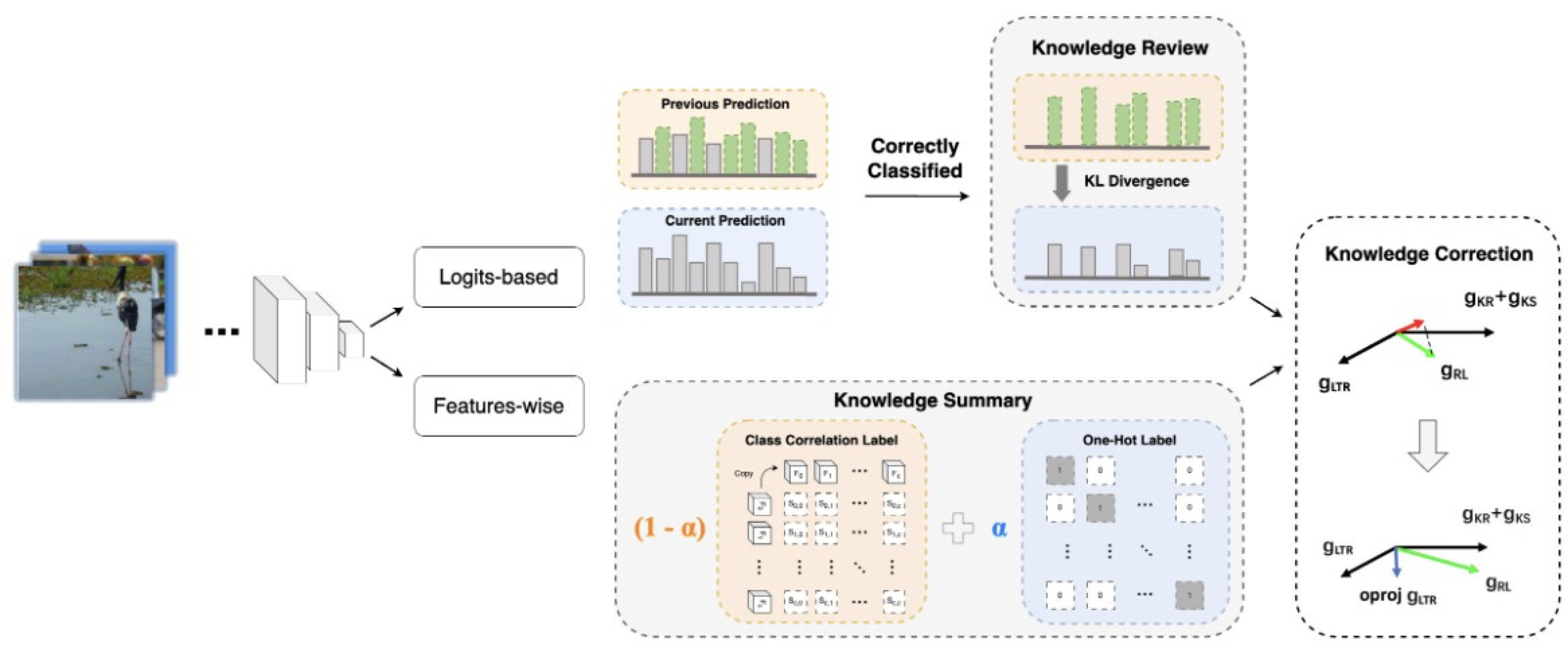

LTRL: Boosting Long-tail Recognition via Reflective Learning

Oral, Top 2.1%

Reflective Learning, a plug-and-play method, boosts long-tail recognition by mimicking human thinking.

Qihao Zhao *, Yalun Dai *, Shen Lin, Wei Hu, Fan Zhang, Jun Liu

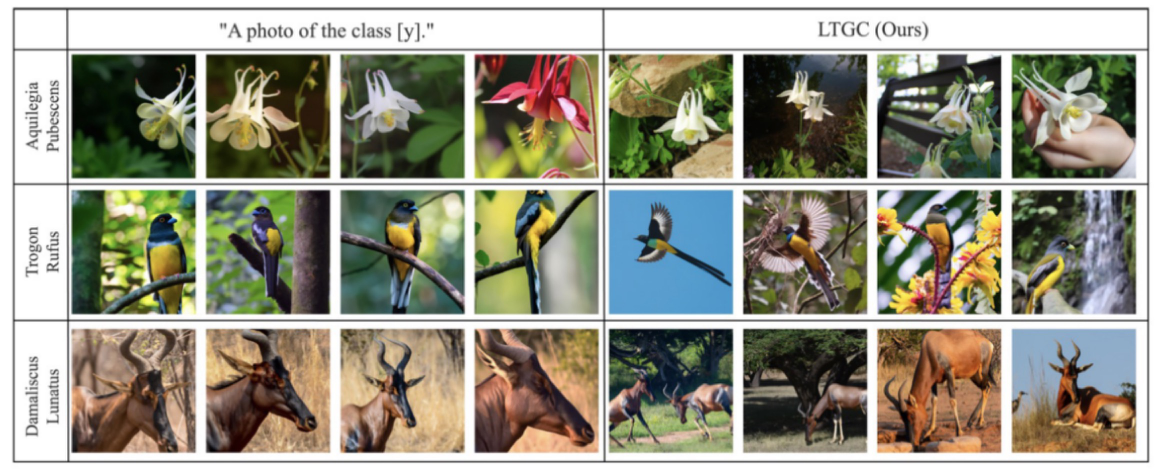

LTGC: Long-tail Recognition via Leveraging LLMs-driven Generated Content

Oral, Top 0.78%

A generative and tuning framework leveraging the knowledge of large language models for long-tail recognition.

Qihao Zhao *, Yalun Dai *, Hao Li, Wei Hu, Fan Zhang, Jun Liu

Project

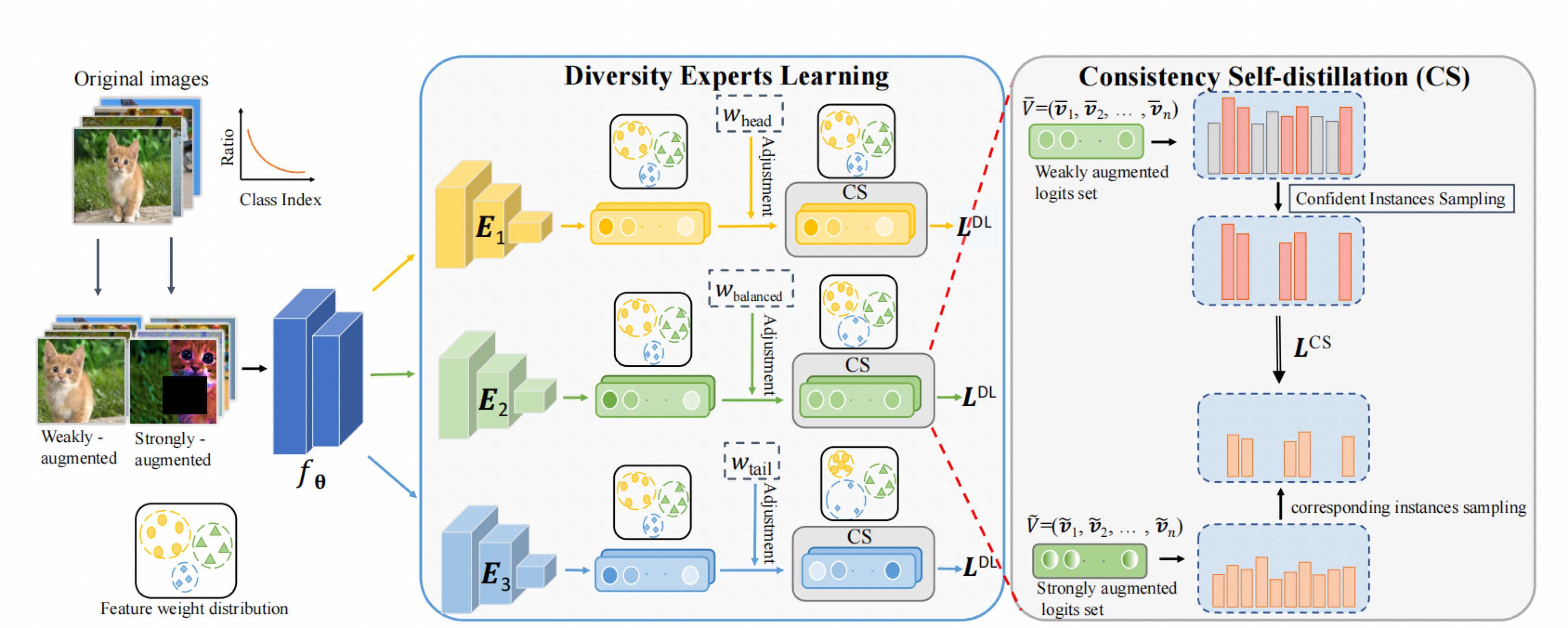

MDCS: More Diverse Experts with Consistency Self-distillation for Long-tailed Recognition

A long-tail learning method for maximizing expert recognition diversity with minimum model variance.

Qihao Zhao, Chen Jiang, Wei Hu, Fan Zhang, Jun Liu

Code

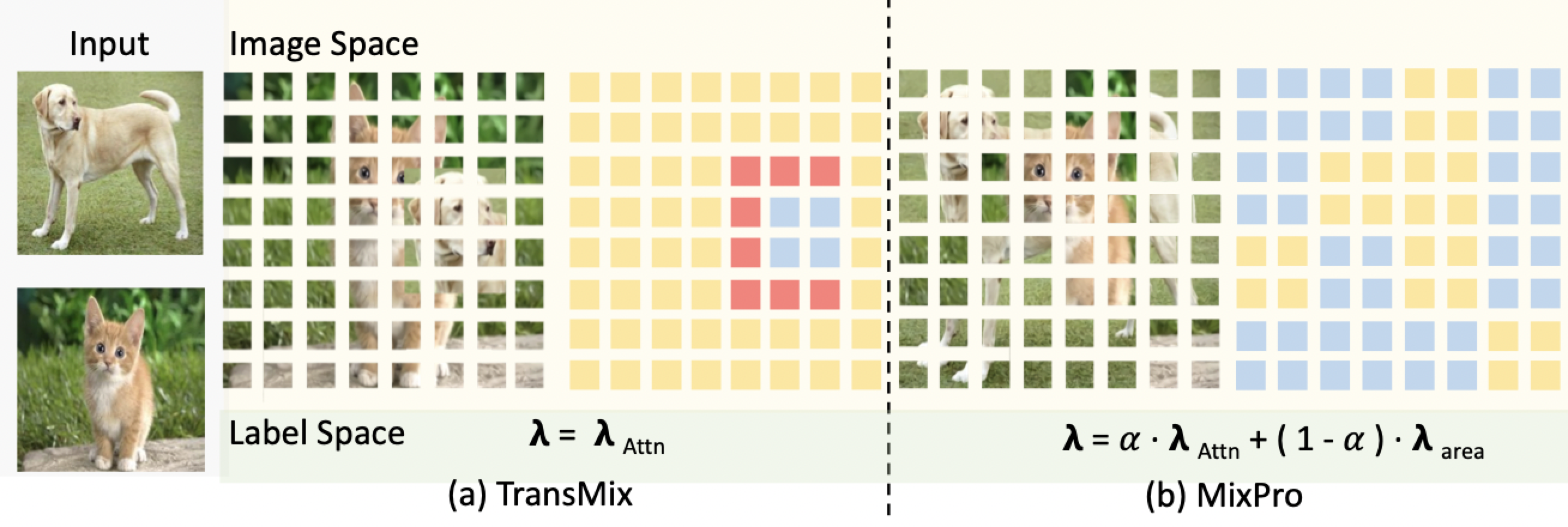

MixPro: Data Augmentation with MaskMix and Progressive Attention Labeling for Vision Transformer

A novel data augmentation method designed for ViTs considering global information mixture and label space re-weighting.

Qihao Zhao, Yangyu Huang, Wei Hu, Fan Zhang, Jun Liu

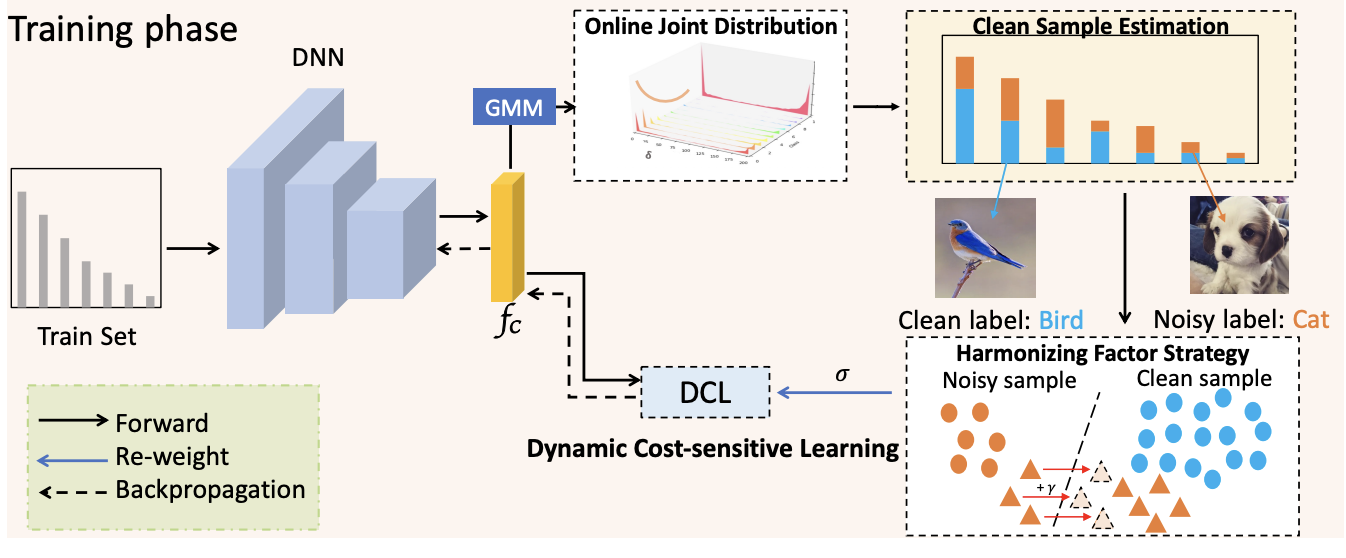

A novel framework to address the challenge of noisy labels under long-tailed distribution.

Qihao Zhao, Fan Zhang, Wei Hu, Songhe Feng, Jun Liu

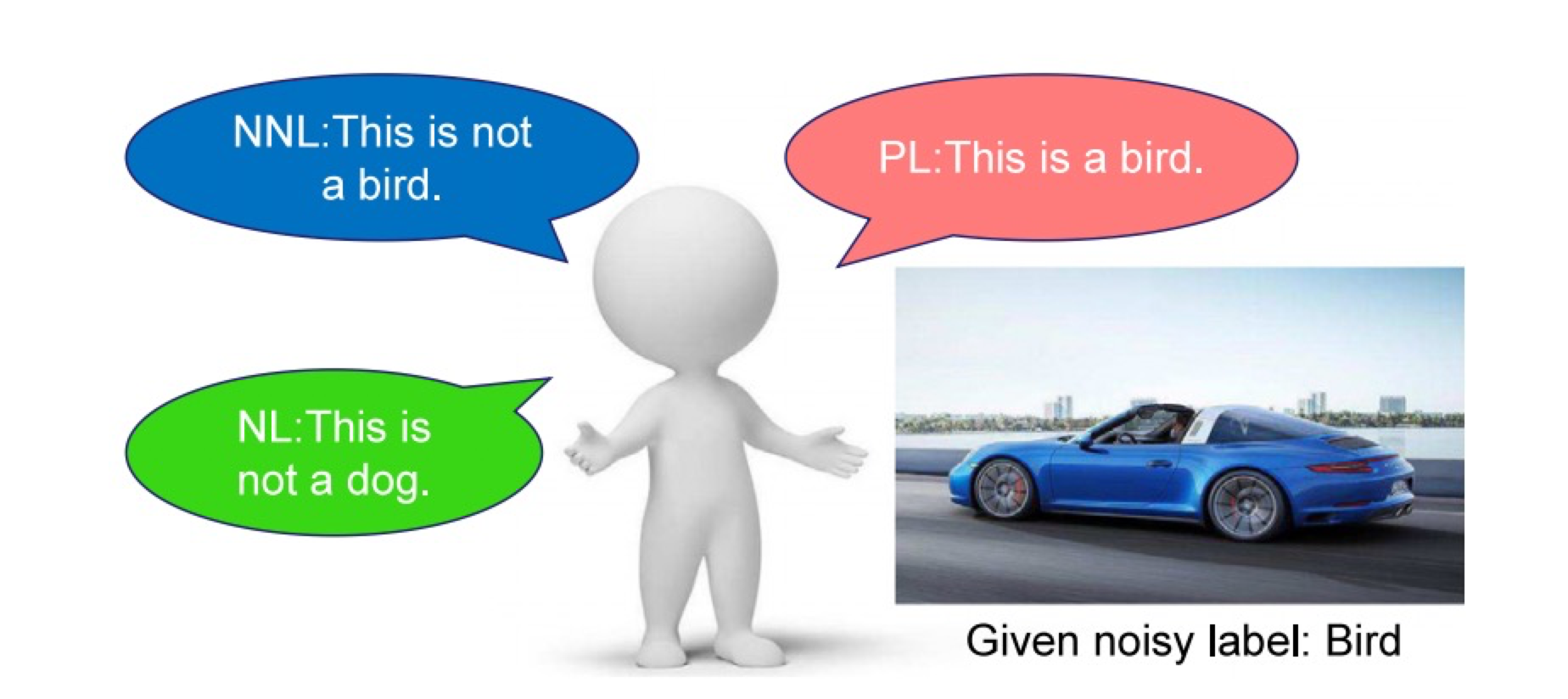

P-DIFF+: Improving Learning Classifier with Noisy Labels by Noisy Negative Learning Loss

A novel loss function that mines knowledge from noisy samples to improve model robustness.

Qihao Zhao, Wei Hu, Yangyu Huang, Fan Zhang

💻 Service

- Co-supervised Students:

- Yalun Dai (CVPR x 1, ECCV x 1, From BUCT, Now at NTU)

- Chen Jiang (ICCV x 1, From BUCT, Now at McGill University)

- Reviewer: CVPR, NeurIPS, ICLR, T-CSVT, ICCV, ACM MM